Towards Inclusive and Embodied Metaverse Locomotion

While VR increasingly enables immersive digital experiences, most locomotion systems are implicitly designed for users who can freely stand, walk, and rotate in physical space. This creates barriers for users with limited mobility, fatigue, or small play areas. This work reframed the problem: instead of offering alternative input methods, it aimed to ensure equivalently embodied experiences regardless of physical ability or available space. The goal was not to modify the metaverse to fit accessibility constraints, but to redesign interaction so that immersion and agency remain intact across physical abilities and play-area sizes.

Learning Natural Body Cues for Accessible VR Locomotion

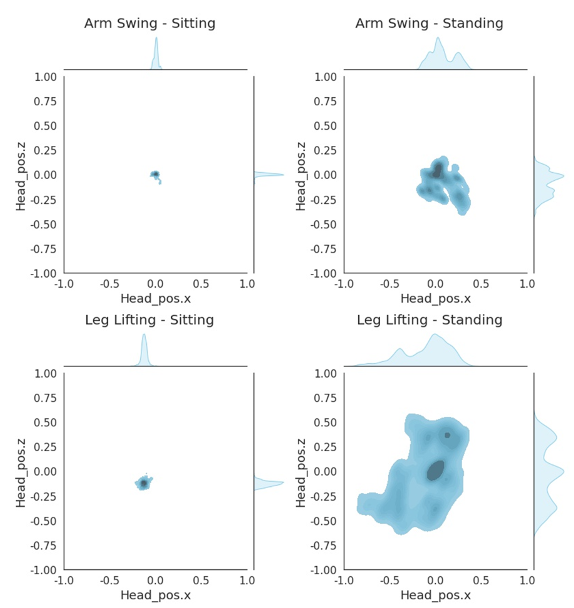

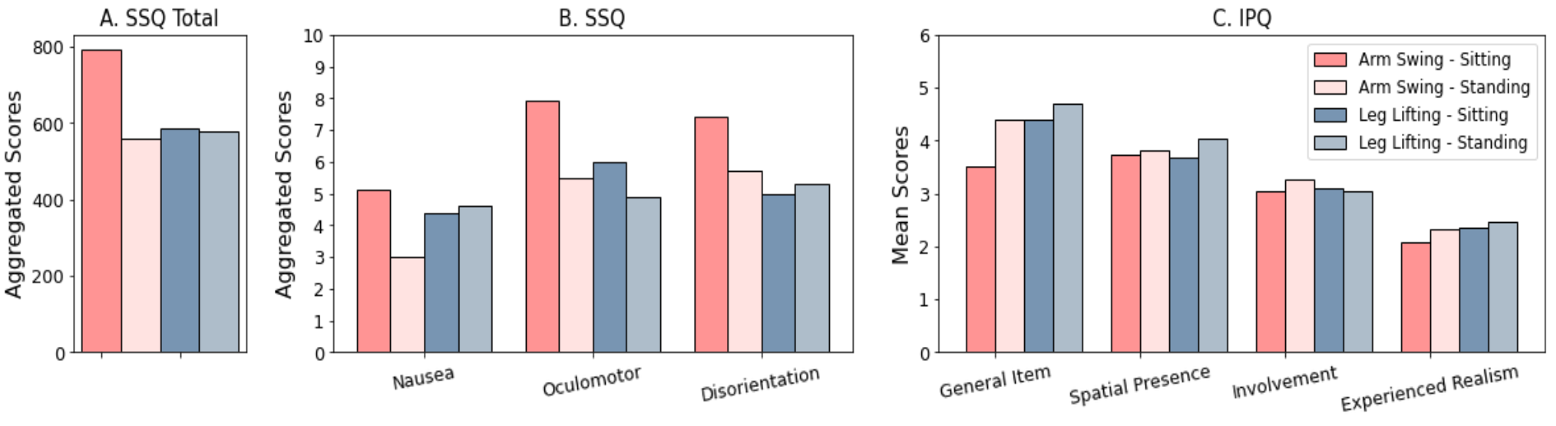

To achieve this, my team first conducted a user study that compared posture and input styles using standard presence, engagement, and discomfort metrics, and used the results to inform input design decisions. Based on the study findings, we developed an upper-body-driven locomotion model using a dual-LSTM architecture: one network classifies walk/stop intent from arm motion, while the second predicts continuous rotation direction. The model enables users to walk and rotate naturally in VR without joysticks, teleportation, or physical displacement, preserving immersion while reducing motion sickness and spatial risk.
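The dual-network idea can be illustrated with a minimal sketch. This is not the project's implementation: the weights are random and untrained, and the feature count (`N_FEATURES`), hidden size, window length, the three-way `ROTATION_LABELS` output, and the helper names `TinyLSTM` and `locomotion_command` are all assumptions made for illustration. It only shows how two separate LSTMs can read the same window of arm-motion features and produce a walk/stop decision and a rotation direction side by side.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


class TinyLSTM:
    """Single-layer LSTM with a linear read-out (random, untrained weights)."""

    def __init__(self, n_in, n_hidden, n_out, seed):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)]
                    for _ in range(rows)]

        self.n_hidden = n_hidden
        # One weight matrix per gate: input (i), forget (f),
        # candidate (g), and output (o).
        self.W = {g: mat(n_hidden, n_in + n_hidden) for g in "ifgo"}
        self.b = {g: [0.0] * n_hidden for g in "ifgo"}
        self.W_out = mat(n_out, n_hidden)

    def _gate(self, name, x_h, act):
        return [act(sum(w * v for w, v in zip(row, x_h)) + b)
                for row, b in zip(self.W[name], self.b[name])]

    def forward(self, sequence):
        """Run the whole window and return logits from the last hidden state."""
        h = [0.0] * self.n_hidden
        c = [0.0] * self.n_hidden
        for x in sequence:
            x_h = list(x) + h
            i = self._gate("i", x_h, sigmoid)
            f = self._gate("f", x_h, sigmoid)
            g = self._gate("g", x_h, math.tanh)
            o = self._gate("o", x_h, sigmoid)
            c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
            h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return [sum(w * v for w, v in zip(row, h)) for row in self.W_out]


# Assumed label set; the source only states that rotation direction is predicted.
ROTATION_LABELS = ("left", "none", "right")


def locomotion_command(window, walk_net, rot_net):
    """Combine both networks' outputs into one command (hypothetical helper)."""
    p_walk = sigmoid(walk_net.forward(window)[0])
    rot_probs = softmax(rot_net.forward(window))
    direction = ROTATION_LABELS[rot_probs.index(max(rot_probs))]
    return {"walk": p_walk >= 0.5, "p_walk": p_walk,
            "rotation": direction, "rotation_probs": rot_probs}


N_FEATURES = 6  # assumed, e.g. 3-axis motion for each of two tracked hands
walk_net = TinyLSTM(N_FEATURES, n_hidden=8, n_out=1, seed=0)
rot_net = TinyLSTM(N_FEATURES, n_hidden=8, n_out=3, seed=1)

# Synthetic 30-frame window standing in for real tracked arm motion.
window = [[math.sin(0.2 * t + k) for k in range(N_FEATURES)]
          for t in range(30)]
command = locomotion_command(window, walk_net, rot_net)
print(command)
```

Keeping the two networks separate, as in this sketch, lets the walk/stop decision and the rotation estimate be trained and evaluated independently; with untrained weights the printed command is of course meaningless.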

This system achieved high motion-intent recognition performance (97% for walk/stop, 82% for rotation direction), suggesting that natural, learned motion patterns can replace handheld controllers without sacrificing control fidelity. The project received an award at the mySUNI Creative Challenge (SK) 2022, recognizing its contribution toward a safer, more embodied, and universally accessible metaverse interaction paradigm.
